So true - I recently discovered the Tess and Jo threads, among others. Brilliant!

Would it help other newer users if, when we suspect a random comment is from a bot, we responded with “Bot?”?

Possibly, though that could be a difficult endeavour to try to catch all bots (plus the risk that it is a real person, not a bot). Might be better to just counter it with an effective comment instead? One that fits the context

On a different note, I’m a little perplexed as to the purpose of the bots? Why go to the trouble to spam the beginner forums with insane posts?

Best approach is to just privately flag it to the moderators. They will always respond to it.

Yea, even if the behavior indicates strong bot like behavior it’s still circumstantial and only diminishes the likelihood of it being a real person. Doesn’t eliminate the possibility completely.

Could be a stress test to determine bot controls on the forum but the more I look into it the more it looks like some AI experiment because not all bots exhibit identical behavior.

If you look at some of the responses, the bot appears to pick up key words from the topic title and incorporate them into a message that fits a higher-level general topic/statement. This explains why the theme of their posts remains relevant to the topic but is so disconnected from the specific question or the flow of conversation up to that point.

Worst case scenario, they go off on a tangent and generate messages that build bad advice upon bad advice, like how it transpired in this thread.

There appear to be attempts at realistic conversations between multiple bots, like displayed in this thread. And this goes all the way back to MAR 2018.

One thing I’ve noted is not all bots exhibit the same behavior. There are notable cohorts:

- The two already highlighted have very similar traits to 4 other accounts I sent to the mods.

- Two accounts were also created in early AUG 2020, but have posted links and even replied to other members, which the previous accounts didn’t do.

- Just came across another cohort: accounts created in APR 2017. These have additional characteristics in that they have tried liking and replying to each other’s posts. This cohort consists of 5 identified accounts so far and has not yet been sent to the mods.

- There is another possible cohort from DEC 2020 I made a mental note of a while back (24DEC20 join date I believe). Brushed it off at the time because I thought I was being paranoid.

Could be a harmless AI experiment in the best case scenario but, at worst, could be more nefarious if it’s trying to exploit forum security protocols.

Wow, you really looked into this! I’ll be sure to keep an eye out for these types of posts from now on as well.

Hi @darthdimsky

I just identified 5 or more just in random replies to new posts. The culprit account name is OronzoCapon. If the account holder wishes to comment in response that it is a real person, great. If not, it is one for the moderators to take a look at.

Thanks for initiating this thread.

Wow those threads!

Great work digging into this and identifying the problem. When looked at together, I think you are right that someone is using babypips as an AI bot training ground. Yikes

Thanks for flagging this!

I have also noticed a lot of bots here and it certainly does derail and muddy quite a lot of threads!

My own hypothesis is they use the bots to comment and like each other to build up their profiles and website trust levels. Then down the line they can sell the accounts to shady training course providers or brokers to advertise their services. That way it looks like a long-time, reputable member of BabyPips is recommending a particular company, so it can be trusted. I could be way off, but who knows!

This is highly plausible I think. And coincidentally might explain the more advanced nature of the cohort I suspect I found and just forwarded to the mods.

These two engage in conversations with members but are still terrible in their canned responses. They still create posts that can be vastly different from the topic thread but go undetected because we assume either a language barrier issue or a really bad newbie. One example is this post that’s strictly related to a coding problem. If not a bot, then why an individual (claiming to have some experience trading) would want to get involved with generic comments is mind-boggling. And this is frequent.

On another note, while trawling through old threads I’d also found earlier members making notes of these changes in sporadic posts back in 2018/19. So there is evidence of folks picking up on cues from way back.

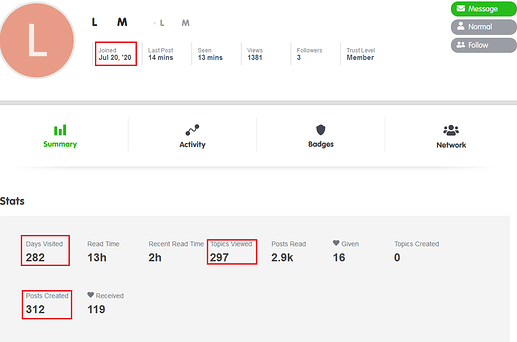

Profiles:

Example of member engagement:

This is an amazing revelation - and not a pleasant one either! I am seriously concerned that I have been wasting a large amount of my time talking to bots!

I have often wondered why some new accounts ask a question, get a series of responses, and never reply. Now I am beginning to wonder… I don’t like this at all! But I think you have done a good job in raising these cases. Each (genuine) poster needs to decide how they are going to deal with this - I know what I am going to do…

This might be a naturally high attrition rate from the whole COVID thing. I suspect there are a lot of new member registrations in times of crisis but no/low engagement beyond that first post. Desperate times breeding unrealistic dreams of getting rich via trading.

The suspected bots though, even if they don’t reply to someone asking them anything, continue to engage with usually irrelevant advice in other topics periodically. So the behavior is markedly different in this instance.

That’s bugged me so much too because there would be in depth replies like crazy detailed but then the OP won’t ever reply back

Sometimes I think they work for shady brokers. Or whatever product it is they’re trying to shill. Idk why they’d start threads though, makes no sense.

Have you heard of GPT3? I’ve often wondered if who I’m talking to online (including here) is actually GPT3-written.

No, first I’ve heard of GPT3. Thanks for the heads up. Always good to read up on what’s happening in these spaces.

My ex-workplace was in the process of adopting IBM Watson (corporate AI solution) a while back but that’s the most I know of any AI solutions.

I think a lot of this is/was COVID related. Lots of new accounts: the user wants to get involved, asks a question, and the further they get into learning, the more they realize it’s not an easy lotto-ticket-sized win. They go bye bye.

Many use cases for it. I’m already seeing AI powered newsletters, news articles etc. But, I’m not sure what benefit an online forum-replying bot would have.

Thought I’d update this in the interest of transparency.

Got a message from the mods a few days back saying that their investigation concluded the actions of the accounts could be attributed to real human beings. Specifically:

What we’ve observed are actions and system indicators that support these accounts as being real human beings. The accounts are asking questions, engaging directly with other specific messages, referencing and making unique thoughts in relation to statement made by others, and actually interacting with the forums platform at a level that we think would be extremely difficult for a bot to do - direct replies, likes, actually reading, etc…

I replied with an excerpt of an exchange that, to me, didn’t appear human. But I’m no expert in AI behavior, nor do I have the resources to do the kind of full-fledged investigation needed to prove/disprove anything beyond reasonable doubt.

I’ve decided I’ll challenge any bot-like opinions I find, in a civil manner, and ask the poster to explain their POV further. If there are humans at the end of these accounts they should be able to express themselves better than the canned responses I’ve personally seen so far.

Not as much time as I have wasted as a project manager talking to subject matter experts who I wished were bots (coz the bots would have more knowledge)